Introduction

Artificial intelligence models are advancing rapidly, particularly in reasoning and coding. OpenAI’s ChatGPT o3-mini and DeepSeek’s R1 model, both launched in early 2025, have had a significant impact on the AI landscape. This article compares their technical specifications, performance metrics, and ideal use cases to help you choose the most suitable model for a given application.

ChatGPT o3-mini: Speed and Accessibility

Innovations and Key Features

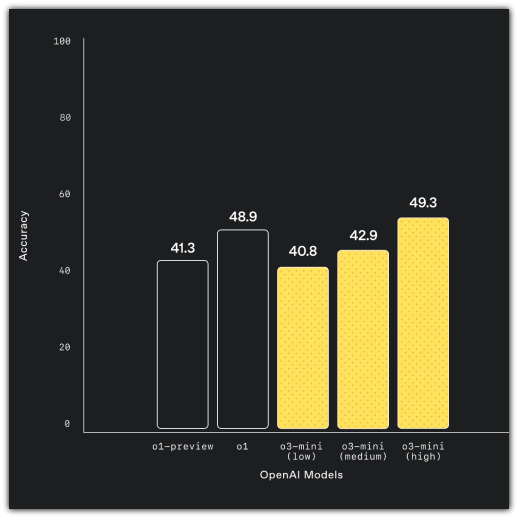

- Adjustable Reasoning Levels: Users can select a low, medium, or high reasoning depth. For instance, in a mathematical problem, high-level reasoning offers step-by-step solutions, while low-level reasoning provides direct answers.

- Integrated Web Search: Real-time data retrieval enables the handling of dynamic information such as stock prices or breaking news.

- Safety Protocols: A “Deliberative Alignment” system ensures outputs adhere to ethical and safety guidelines.
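The adjustable reasoning depth described above can be sketched as a request payload. This is a minimal sketch, assuming the `reasoning_effort` parameter and the `o3-mini` model identifier of OpenAI's Chat Completions API; the helper `build_request` is hypothetical, and nothing is sent over the network.

```python
from typing import Any

# The three reasoning depths named in the feature list above.
ALLOWED_EFFORTS = ("low", "medium", "high")


def build_request(prompt: str, effort: str = "medium") -> dict[str, Any]:
    """Build a Chat Completions-style payload (hypothetical helper, no network call)."""
    if effort not in ALLOWED_EFFORTS:
        raise ValueError(f"effort must be one of {ALLOWED_EFFORTS}, got {effort!r}")
    return {
        "model": "o3-mini",          # assumed model identifier
        "reasoning_effort": effort,  # assumed parameter name
        "messages": [{"role": "user", "content": prompt}],
    }


# High effort for a step-by-step solution, low effort for a direct answer.
detailed = build_request("Solve x^2 - 5x + 6 = 0", effort="high")
quick = build_request("What is 12 * 12?", effort="low")
```

Keeping the payload construction separate from the HTTP call makes it easy to validate the effort level before any tokens are billed.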

Performance Metrics

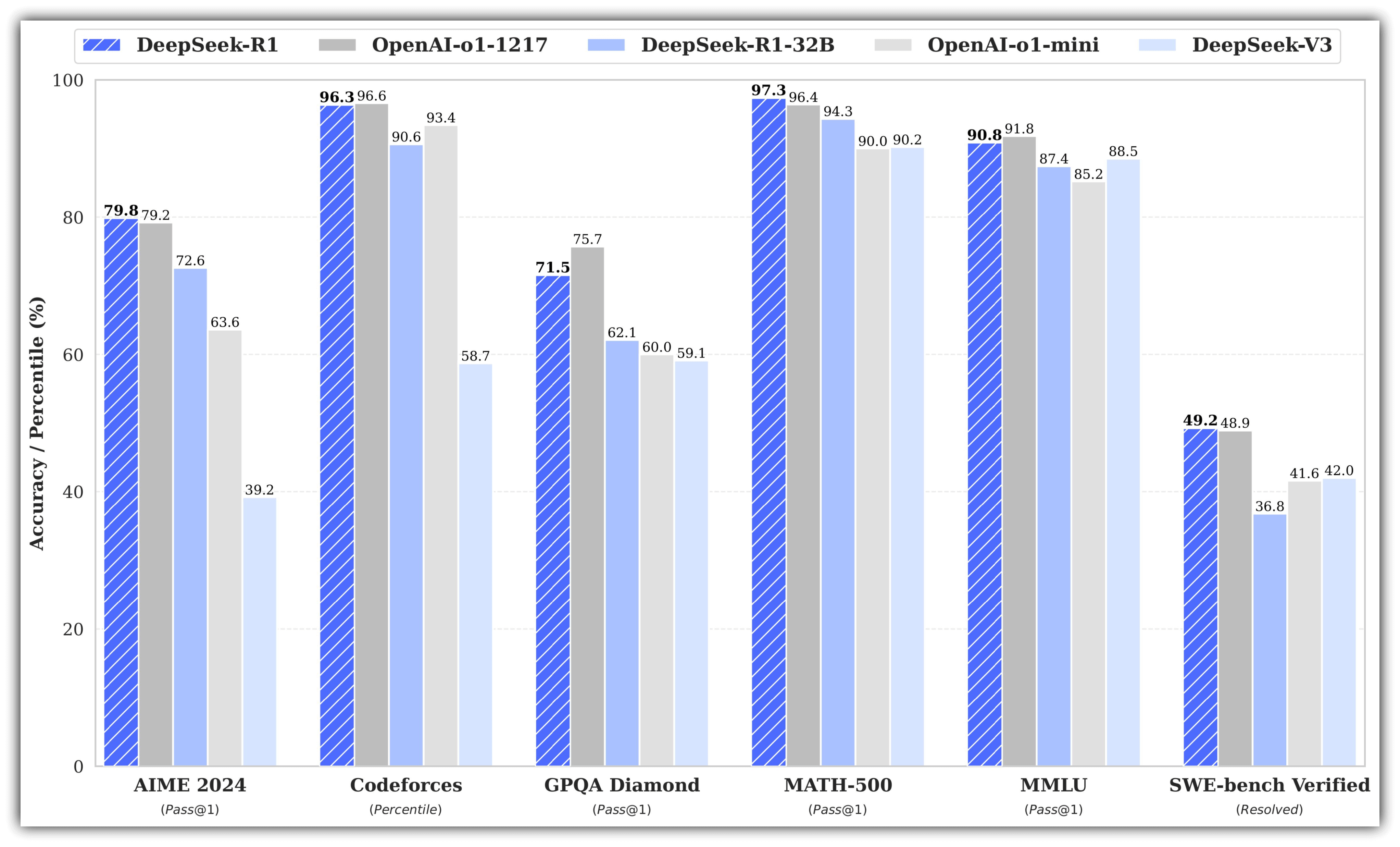

- Benchmark Scores:

- Mathematics (AIME 2024): 87.3% (high level).

- Scientific Logic (GPQA Diamond): 79.7%.

- Coding (Codeforces ELO): 2130.

- Cost: API pricing is $0.55 per million tokens for bulk processing, making it 63% more cost-effective than previous models.
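To turn the per-million-token price into a budget figure, the arithmetic is simply tokens divided by one million, times the rate. The snippet below is a minimal sketch, assuming a single flat rate of $0.55 per million tokens as quoted above (actual pricing may differ for input and output tokens); `estimate_cost_usd` is a hypothetical helper.

```python
def estimate_cost_usd(total_tokens: int, price_per_million_usd: float = 0.55) -> float:
    """Estimate API spend for a token volume at a flat per-million-token rate."""
    if total_tokens < 0:
        raise ValueError("total_tokens must be non-negative")
    return total_tokens / 1_000_000 * price_per_million_usd


# Example: a batch job that processes 20 million tokens.
cost = estimate_cost_usd(20_000_000)
print(f"Estimated cost: ${cost:.2f}")  # prints "Estimated cost: $11.00"
```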

DeepSeek R1: Open-Source and Transparent Analysis

DeepSeek R1 offers open-source advantages for researchers and developers.

Standout Features

- Fully Transparent Reasoning: Provides step-by-step insights into its decision-making process. For example, when debugging code, it explains which lines it checks and why.

- Open-Source and Customizable: The model’s internal architecture can be modified, making it ideal for researchers working with proprietary datasets.

- Training Efficiency: Training cost was 20-40 times lower than that of OpenAI’s GPT-4, using 2,000 Nvidia H800 GPUs over 55 days.
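The transparent reasoning described above can be separated from the final answer in application code. This is a minimal sketch, assuming the model wraps its reasoning in `<think>...</think>` tags, as the open-weight R1 checkpoints are generally reported to do; the helper `split_reasoning` and the sample text are hypothetical.

```python
import re

# Assumed delimiter format for the reasoning trace.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str) -> tuple[str, str]:
    """Return (reasoning, answer) extracted from raw model text."""
    match = THINK_BLOCK.search(raw)
    if match is None:
        # No reasoning trace found: treat the whole text as the answer.
        return "", raw.strip()
    reasoning = match.group(1).strip()
    answer = (raw[:match.start()] + raw[match.end():]).strip()
    return reasoning, answer


# Hypothetical debugging response.
raw_output = (
    "<think>Line 3 divides by n, which is zero when the list is empty.</think>\n"
    "Guard the division with a check for an empty list."
)
reasoning, answer = split_reasoning(raw_output)
```

Logging `reasoning` separately from `answer` lets a team audit why a fix was suggested without showing the full trace to end users.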

Performance Metrics

- Benchmark Scores:

- Mathematics (AIME 2024): 79.8%

- Humanity’s Last Exam: 9.4% (OpenAI’s deep research model scored 26%).

- Coding (SWE-bench): 49.2%.

Performance Comparison: Details and Cost Analysis

1. Reasoning and Problem-Solving

- Complex Analytical Tasks: DeepSeek R1 excels in multi-step logic puzzles, achieving 5-10% higher accuracy in competitive programming questions.

- Daily Use: ChatGPT o3-mini shines with fast response times (average 210ms) and a user-friendly interface.

2. Coding and Technical Applications

| Feature | ChatGPT o3-mini | DeepSeek R1 |
|---|---|---|
| Debugging | Provides basic suggestions | Analyzes code line by line |
| Optimization | Offers general improvements | Focuses on memory usage and time complexity |

3. Cost and Accessibility

- ChatGPT o3-mini: Free users can access up to 150 messages per day. Pro subscribers enjoy unlimited access and priority API support.

- DeepSeek R1: Free for local deployment but requires high GPU resources (minimum 48GB VRAM). Cloud API costs $0.55 per million tokens.

Use Cases: Where Each Model Shines

Ideal Scenarios for ChatGPT o3-mini

- Education: Step-by-step guidance for students solving math problems.

- Customer Support: Optimized chatbots for instant language translation and troubleshooting.

- Content Creation: SEO-friendly blog drafts or social media posts.

Ideal Scenarios for DeepSeek R1

- Scientific Research: Simulating complex datasets (e.g., climate projections).

- Software Development: Automating codebase audits and generating technical documentation.

- Financial Analysis: Causal analysis of stock market trends.

Technical Specifications: Architecture and Training

| Feature | ChatGPT o3-mini | DeepSeek R1 |
|---|---|---|
| Architecture | Dense Transformer | Mixture-of-Experts (MoE) + RLHF |
| Parameter Count | ~200 Billion | 671 Billion |
| Context Window | 200K tokens (100K max output) | 128K tokens |
| Training Hardware | 1.2M A100 GPU-hours | 2.664M H800 GPU-hours |
| API Providers (not an exhaustive list) | OpenAI API | DeepSeek, HuggingFace |
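The DeepSeek R1 training figures quoted in this article can be cross-checked with simple arithmetic. The snippet below is a rough sketch, assuming all 2,000 GPUs ran around the clock for the full 55 days; `gpu_hours` is a hypothetical helper.

```python
def gpu_hours(num_gpus: int, days: float) -> float:
    """GPU-hours consumed if every GPU runs 24 hours a day for the given days."""
    return num_gpus * days * 24


# 2,000 H800 GPUs over 55 days, as quoted in the Standout Features list.
estimate = gpu_hours(2_000, 55)  # 2,640,000 GPU-hours

# Figure from the specifications table: 2.664M H800 GPU-hours.
table_value = 2_664_000
relative_gap = abs(table_value - estimate) / table_value
print(f"Estimate: {estimate:,.0f} GPU-hours, gap vs. table: {relative_gap:.1%}")
```

Under that assumption the two figures agree to within about 1%, so the GPU count, duration, and GPU-hour total are mutually consistent.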

Conclusion: Which Model Should You Choose?

- Choose ChatGPT o3-mini If:

- You need fast, ready-to-use solutions.

- You require STEM education support or daily technical assistance.

- Choose DeepSeek R1 If:

- You want to customize the model’s internal workings.

- You’re conducting academic research or industrial-scale code analysis.

- The Future of the Market: OpenAI is responding to DeepSeek’s open-source move with free trials, while giants like Microsoft and Nvidia are integrating DeepSeek R1 into their cloud platforms. Competition is expected to drive costs down and accessibility up.
